To make the most of the transformation from a physical to a virtual network architecture, operators need to avoid numerous bear traps. Jeremy Cowan asks industry experts where CSPs may feel the worst pain.

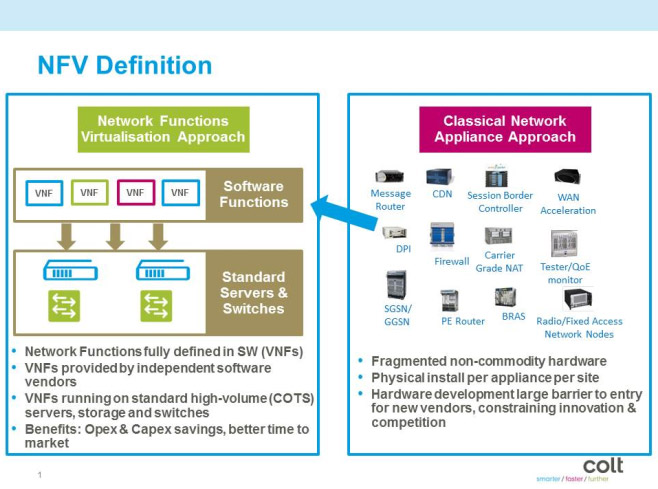

Tony Poulos, Enterprise Business Assurance Market Strategist, WeDo Technologies, was clear where the likely pain points for communication service providers (CSPs) migrating to network function virtualisation (NFV) will be. “Consolidating vendor-specific equipment types to standard high-volume commercial off-the-shelf (COTS) hardware will be a challenge. Hardware will not be purpose-built like many of the existing appliances that are dedicated to performing a single function within the network. This presents the possibility of malware introduction and potentially less than optimal performance.”

Network functions that now consist of software from multiple vendors will need to be co-ordinated by CSPs and monitored, says Poulos. Being able to quickly introduce and operationalise new services while keeping costs under control will be the objective and key to their success.

Guy Daley, director and CTO of Product Management, Cisco, puts it even more succinctly. The pain points for CSPs migrating to NFV are the co-existence of PNFs and VNFs (physical and virtual network functions) as well as “integration of existing OSS and management systems, and a lack of published standards – the framework is not quite standardised, and is subject to interpretation.”

Get the right design

Javier Benitez, senior network architect at Colt Technology Services, believes that, “In the current state of NFV, where many vendor products are still in beta and reference architectures are still being discussed and agreed by the industry, one of the most common pain points for CSPs is coming up with the right design / architecture. As operators try to build a real solution they need to closely manage the situation. It has to be assumed that mistakes will be made and there is a need to limit the scope of initial deployments.”

“In addition, with there being a need for IT and Network teams to work closer together, there may be a requirement to reorganise team structures and processes. Both teams need to be on the same page and combined should have full management of all services offered in order to make an NFV model work,” he added. “Network operations will dramatically change to a new world where network services run in a cloud environment, requiring organisational transformation.”

This is something with which Barry G. Hill, global head, NFV, Oracle clearly agrees. “Pain point number one,” he says, “might be the cultural changes that will have to be part of the NFV evolution. The new ways in which networks will have to be pieced together and then deployed and managed will affect many people in what traditionally have been very slow-moving, bureaucratic environments. When trying to keep up with competitors such as Facebook, Amazon and Apple, the culture will have to not only embrace constant change, but also start thinking about how to drive that change.”

Benitez believes that even though the long-term benefits of NFV are clear, CSPs need to be ready in the short term for extra expense as they manage the learning curve at all levels. New skills require investment and as changes to network functionality take place, CSPs will need to acquire new skills in both the operations and engineering teams. (This is an area we will cover in Part 4 of this series of articles. ED.)

According to Shaul Rozen, director of Product Strategy at Amdocs, from a technology point of view, one of the key challenges is that NFV technology will mature over time. “Although many vendors currently have components of the NFV stack, there seem to be minimal viable products and they do not address the need to manage the full range of inherent complexities, from the infrastructure up to the user-defined experience. Also, the technology still needs to prove that it adheres to the security, performance and reliability of telco-grade requirements at scale (including the NFV Infrastructure such as that provided today from OpenStack).”

“The complexities of frictionless Virtual Network Function (VNF) onboarding and master alarm management are still evolving to a more optimal level. At the same time, standardisation is lagging and many network function providers use proprietary systems to manage their virtual network functions, which creates difficulties in realising the open environment that is required for NFV. Service providers will need to manage the coexistence of physical and virtual networks and services that span both. This means integration at the process and technology level,” says Rozen.

“One such example is the need to keep a near real-time physical resource inventory to support NFV needs. Last but not least,” he says, “there is a risk that NFV may increase management complexity rather than reduce it.”

In addition, new service planning processes will need to change to support more rapid service creation; this will probably require new organisational processes, organisational structure changes, and even cultural changes. Rozen warns that, “Carriers who truly want to transform the way they do business – not just the technology they use to do it – will have to go through a true change management process. Finally, the vendor ecosystem and accountability will differ from current practices.”

Change of mindset

NFV sometimes requires a change of mindset about solution architecture, says Gary McKenzie, senior architect at Peer 1 Hosting, and the more transient nature of NFV makes licensing more complex than for physical devices. That said, he believes the increasing availability of usage-based billing can turn that into a positive as you only pay for what is in use right now, and spare hardware isn’t depreciating while it sits in stock.

The other pain point, of course, says McKenzie, is that “the centralisation of many devices on a single NFV cluster creates potentially very large failure domains if something goes wrong. You need to be rigorous in your failure testing and infrastructure design to reduce the risks here.”

It is the disparate and distributed nature of networks, the different management interfaces and APIs, and the multiple management domains involved in service delivery, all of which create management and orchestration challenges, that most concern Rob Marson, VP Marketing, Nakina Systems. “A consistent approach to mediating and harmonising management access will be needed in order to abstract the complexity of the underlying physical network functions, virtual network functions and virtual network infrastructure from OSS and orchestration systems,” he insists.

“Assuring network data integrity will become increasingly vital and challenging. More autonomous changes in the networks create higher probability for network data inconsistency. Discrepancies between what a service provider believes is in the network and what the actual configuration is can range from 20% to as high as 80%. These challenges are exacerbated with the transformation to NFV – more network functions and more configurable parameters at every layer (i.e. within VNFs and NFVI),” says Marson.

He adds that accurate data is crucial for efficient network operations, workflow automation, cost optimisation, and revenue assurance. Service providers must eliminate data inaccuracies in OSS, BSS and orchestration systems and correct discrepancies in network configurations. Continuous and automated network integrity audit processes are essential to align inventory and network data. Network functions and virtual network infrastructures provide more configurable parameters, increasing the likelihood of mismatches leading to service impacts.
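As a rough illustration of the kind of automated integrity audit Marson describes, the sketch below compares what an inventory system records against what is discovered in the network and reports a discrepancy rate. Everything in it is an assumption made for illustration: the function name `audit_inventory`, the dictionary layout, and the example functions `fw-1`, `lb-1` and `dns-1` are hypothetical and do not reflect any vendor's product or data model.

```python
from typing import Any

# Sentinel so that a parameter absent on one side never compares equal to None.
_MISSING = object()


def audit_inventory(
    recorded: dict[str, dict[str, Any]],
    discovered: dict[str, dict[str, Any]],
) -> dict[str, Any]:
    """Compare recorded inventory with discovered network state.

    Both arguments map a network function name to its configuration
    parameters (a hypothetical, simplified data model).
    """
    all_names = sorted(set(recorded) | set(discovered))
    mismatches: dict[str, str] = {}

    for name in all_names:
        if name not in discovered:
            mismatches[name] = "missing from network"
        elif name not in recorded:
            mismatches[name] = "missing from inventory"
        else:
            rec, disc = recorded[name], discovered[name]
            # Collect every parameter whose value differs between the two views.
            differing = sorted(
                key
                for key in set(rec) | set(disc)
                if rec.get(key, _MISSING) != disc.get(key, _MISSING)
            )
            if differing:
                mismatches[name] = "parameters differ: " + ", ".join(differing)

    rate = len(mismatches) / len(all_names) if all_names else 0.0
    return {"mismatches": mismatches, "discrepancy_rate": rate}


if __name__ == "__main__":
    inventory = {"fw-1": {"vcpus": 4}, "lb-1": {"vcpus": 2}}
    network = {"fw-1": {"vcpus": 8}, "lb-1": {"vcpus": 2}, "dns-1": {"vcpus": 1}}
    report = audit_inventory(inventory, network)
    for name, reason in report["mismatches"].items():
        print(f"{name}: {reason}")
    print(f"discrepancy rate: {report['discrepancy_rate']:.0%}")
```

In practice such a check would need to run continuously and draw on live discovery data; the point of the sketch is only that the comparison itself is simple once both views are available in a common form.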

Marson also cautions that new security vulnerabilities are introduced as the attack surface dramatically expands. The common cloud infrastructure could result in hypervisor vulnerabilities. Virtual Machine, guest OS, or VNF manipulation could compromise the integrity of the hypervisor. It will be important that logging and monitoring of hypervisor activities be performed. Similarly, it will be important that VNF configurations themselves are audited to understand whether configuration or operating system changes may have an impact on security integrity. Security access management strategies, for both people and autonomous systems, will be crucial to protect physical and virtual network functions, and network infrastructure, from inadvertent or malicious attacks.

Sameh Yamany, CTO and VP of Mobile Assurance & Analytics at JDSU, agrees that major changes are required to OSS or BSS and NSE tools. He thinks a hybrid strategy will be helpful, with NFV initially being introduced in ‘islands’ (of the same NE type) such that hybrid networks will exist. Yamany says, “this will be both a ‘stepping stone’ and a complication to be managed.” (We will also come back to hybrid solutions later in this article series. ED.)

As to other challenges, Yamany believes a new skill mix will be required in operations. “Major integration platforms will be required to support the phased NFV approach. Maintaining, or hopefully improving, current customer quality of experience levels over hybrid physical and NFV networks,” will also be difficult.

Of course, operators cannot simply shut down the current network, says Ben Parker, principal technologist at Guavus. “Virtual components must be integrated into it without causing disruption. The virtual network will have different management requirements, the operational skillsets will be different, and the failure modalities will be different. This will require the operator to employ new tools, train staff, hire new people, and in some cases virtualisation will result in a net reduction in personnel. All of these factors must be considered prior to deploying the virtual network infrastructure.”

Cost benefit

Another major concern for Parker is the traditional way in which networks are deployed. “Traditionally, the hardware and software all had to be 99.999% reliable. In the virtual environment, it is expected that a service can be even more reliable, while running on hardware that is only 99% reliable. To do this, analytics software should be used to perform predictive analysis on data coming from the network, hardware and software to identify future failures. This function will allow the service quality to be managed out of band and, therefore, more accurately and at lower cost than doing it on an application-by-application basis.”
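Parker's figures invite a quick piece of arithmetic. One common way a service can exceed the reliability of the hardware beneath it is redundancy, and the sketch below shows the textbook calculation for that case. It is not Parker's method (he points to predictive analytics), and it rests on two simplifying assumptions: that instances fail independently, and that the service is up as long as at least one instance is up. The function names are hypothetical.

```python
def service_availability(instance_availability: float, instances: int) -> float:
    """Availability of a service that stays up while any one instance is up.

    Assumes instance failures are independent (a simplification).
    """
    return 1.0 - (1.0 - instance_availability) ** instances


def instances_needed(instance_availability: float, target: float) -> int:
    """Smallest number of redundant instances that meets the target."""
    if not 0.0 < instance_availability < 1.0 or not 0.0 < target < 1.0:
        raise ValueError("availabilities must be strictly between 0 and 1")
    count = 1
    while service_availability(instance_availability, count) < target:
        count += 1
    return count


if __name__ == "__main__":
    for n in (1, 2, 3):
        print(n, f"{service_availability(0.99, n):.6f}")
    print("instances for five nines:", instances_needed(0.99, 0.99999))
```

Under these assumptions, two 99% instances give 99.99% and three are needed to pass 99.999%. Real deployments rarely see fully independent failures, which is one reason the large failure domains McKenzie warns about matter.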

Inflexibility and reliability are also on Barry G. Hill’s mind at Oracle. “Another pain point could be legacy OSS/BSS, since the two main benefits most operators associate with NFV are service agility and flexibility — not words that have characterised older, less flexible OSS/BSS systems,” he says. “In order to provide useful insights into the networks and services, management systems will have to accommodate new approaches to activation and provisioning, and accommodate new entities like NFV orchestrators and other NFV-related tools. Networks have been built for five-nines reliability, which often means they aren’t easy to change; hardware changes can indeed cause a ripple effect of management and operational issues, so operators will be very vigilant in assessing what hardware will be virtualised and how.”

Last but not least, says Hill, no one wants to take chances with their data centres. So, finding the best way to connect data centres and data centre components — including cloud resources and colocation racks — to the virtualised architecture will be critical. Operators will pick the path that ensures visibility into how virtual network function failures affect services; they don’t want to lose that visibility or the ability to react quickly.

There’s a cost attached to such deployments, as Gerry Donohoe, director of Solutions Engineering at Openet, reminds us. From a commercial perspective, he believes CSPs must ensure that deployment costs adapt to elastically scalable virtualised solutions. “For example,” he says, “any licenses tied to physical hardware or peak throughput will limit business agility. Furthermore, when a multivendor ecosystem is considered, careful management of SLAs (service level agreements) will be needed to avoid the potential of vendor finger pointing.”

“Meanwhile, from a technical perspective it is vital that a CSP understands the performance limitations of commercial off-the-shelf (COTS) hardware and has ensured its vendors’ solutions are optimised regarding hypervisor, media and signalling capabilities,” Donohoe concludes. “A CSP must also understand how virtualised applications can be sufficiently resilient to both hardware and software failure and outages.”

“Discrepancies between what a service provider believes is in the network and the actual configuration can range from 20% to 80%.” Rob Marson, Nakina Systems


Next time: How to avoid NFV transformation pains — Part 3: The importance of Orchestration